Metaprogramming¶

Metaprogramming is one of the most complex and powerful approaches to programming in Python. Metaprogramming tools and techniques have evolved with Python; so, before we dive into this topic, it is important for you to know all the elements of modern Python syntax well.

1. What is metaprogramming¶

Maybe there is a good academic definition of metaprogramming that we can cite here, but this is more about good software craftsmanship than about computer science theory. This is why we will use the following simple definition:

“Metaprogramming is a technique of writing computer programs that can treat themselves as data, so they can introspect, generate, and/or modify themselves while running.”

Using this definition, we can distinguish between two major approaches to metaprogramming in Python.

The first approach concentrates on the language’s ability to introspect its basic elements, such as functions, classes, or types, and to create or modify them on the fly. Python provides a lot of tools in this area. This feature of the language is used by IDEs (such as PyCharm) to provide real-time code analysis and name suggestions. The simplest metaprogramming tools in Python that utilize language introspection are decorators, which allow you to add extra functionality to existing functions, methods, or classes. Next are special methods of classes that allow you to interfere with the class instance creation process. The most powerful are metaclasses, which allow programmers to completely redesign Python’s implementation of object-oriented programming.

The second approach allows programmers to work directly with code, either in its raw (plain text) format or in more programmatically accessible abstract syntax tree (AST) form. This second approach is, of course, more complicated and difficult to work with but allows for really extraordinary things, such as extending Python’s language syntax or even creating your own domain-specific language (DSL).

2. Decorators¶

The decorator syntax was explained earlier as syntactic sugar for the following simple pattern:

    def decorated_function():
        pass

    decorated_function = some_decorator(decorated_function)

This verbose form of function decoration clearly shows what the decorator does. It takes a function object and modifies it at runtime. As a result, a new function (or anything else) is created based on the previous function object and bound to the same name. This decoration may be a complex operation that introspects the decorated function and gives different results depending on how the original function was implemented. All this means that decorators can be considered a metaprogramming tool.

This is good news. The basics of decorators are relatively easy to grasp and in most cases make code shorter, easier to read, and also cheaper to maintain. Other metaprogramming tools that are available in Python are more difficult to understand and master. Also, they might not make the code simple at all.
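To make the introspection point more concrete, here is a minimal sketch of a decorator that inspects the signature of the function it wraps. The names record_calls and add are hypothetical and chosen only for illustration; inspect.signature() and functools.wraps() come from the standard library.

```python
import functools
import inspect


def record_calls(function):
    """Hypothetical decorator that records the arguments of each call."""
    # Introspect the decorated function once, at decoration time.
    signature = inspect.signature(function)

    @functools.wraps(function)
    def wrapper(*args, **kwargs):
        # Map positional and keyword arguments to parameter names.
        bound = signature.bind(*args, **kwargs)
        bound.apply_defaults()
        wrapper.calls.append(dict(bound.arguments))
        return function(*args, **kwargs)

    wrapper.calls = []
    return wrapper


@record_calls
def add(a, b=2):
    return a + b


add(1)
add(3, b=4)
print(add.calls)
```

Because the wrapper is built with functools.wraps(), the decorated function keeps its original name and docstring, while the recorded calls are available as an extra attribute.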

2.1. Class decorators¶

One of the lesser-known syntax features of Python is the class decorator. Its syntax and implementation are exactly the same as for function decorators. The only difference is that a class decorator is expected to return a class instead of a function object. Here is an example class decorator that modifies the __repr__() method to return a printable object representation that is shortened to some arbitrary number of characters:

    def short_repr(cls):
        cls.__repr__ = lambda self: super(cls, self).__repr__()[:8]
        return cls

    @short_repr
    class ClassWithRelativelyLongName:
        pass

The following is what you will see in the output:

    >>> ClassWithRelativelyLongName()
    <ClassWi

Of course, the preceding snippet is not an example of good code by any means. Still, it shows how multiple language features that are explained in the previous chapter can be used together, for example:

Not only instances but also class objects can be modified at runtime

Functions are descriptors too, so they can be added to the class at runtime because the actual method binding is performed on the attribute lookup as part of the descriptor protocol

The super() call can be used outside of a class definition scope as long as proper arguments are provided

Finally, class decorators can be used on class definitions

The other aspects of writing function decorators apply to the class decorators as well. Most importantly, they can use closures and be parametrized. Taking advantage of these facts, the previous example can be rewritten into the following more readable and maintainable form:

    def parametrized_short_repr(max_width=8):
        """Parametrized decorator that shortens representation"""
        def parametrized(cls):
            """Inner wrapper function that is actual decorator"""
            class ShortlyRepresented(cls):
                """Subclass that provides decorated behavior"""
                def __repr__(self):
                    return super().__repr__()[:max_width]

            return ShortlyRepresented

        return parametrized

The major drawback of using closures in class decorators this way is that the resulting objects are no longer direct instances of the class that was decorated but instances of the subclass that was created dynamically in the decorator function. Among other things, this will affect the class’s __name__ and __doc__ attributes, as follows:

    @parametrized_short_repr(10)
    class ClassWithLittleBitLongerLongName:
        pass

Such usage of class decorators will result in the following changes to the class metadata:

    >>> ClassWithLittleBitLongerLongName().__class__
    <class 'ShortlyRepresented'>
    >>> ClassWithLittleBitLongerLongName().__doc__
    'Subclass that provides decorated behavior'

Unfortunately, this cannot be fixed as simply as it was for function decorators, as we explained before. In class decorators, you can’t simply use the additional wraps decorator to preserve the original class type and metadata. This limits the usefulness of class decorators in this form in some circumstances. They can, for instance, break the results of automated documentation generation tools.
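One possible workaround, which is not part of the original example, is to copy the identifying metadata by hand. The sketch below is a hypothetical variant of the parametrized decorator shown above (the names short_repr_keeping_metadata and Documented are assumptions). It restores the name and docstring only; the returned object is still a dynamically created subclass.

```python
def short_repr_keeping_metadata(max_width=8):
    """Hypothetical variant of the parametrized decorator shown above."""
    def decorator(cls):
        class ShortlyRepresented(cls):
            def __repr__(self):
                return super().__repr__()[:max_width]

        # Copy the identifying metadata from the decorated class by hand.
        for attribute in ("__module__", "__name__", "__qualname__", "__doc__"):
            setattr(ShortlyRepresented, attribute, getattr(cls, attribute))
        return ShortlyRepresented

    return decorator


@short_repr_keeping_metadata(10)
class Documented:
    """Original docstring"""


print(Documented.__name__, Documented.__doc__)
```

This keeps documentation tools that rely on __name__ and __doc__ working, but code that compares types directly will still see the subclass.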

Still, despite this single caveat, class decorators are a simple and lightweight alternative to the popular mixin class pattern. A mixin in Python is a class that is not meant to be instantiated, but is instead used to provide some reusable API or functionality to other existing classes. Mixin classes are almost always added using multiple inheritance. Their usage usually takes the following form:

    class SomeConcreteClass(MixinClass, SomeBaseClass):
        pass

Mixin classes form a useful design pattern that is utilized in many libraries and frameworks. To name one, Django is an example framework that uses them extensively. While useful and popular, mixins can cause some trouble if not designed well, because, in most cases, they require the developer to rely on multiple inheritance. As we stated earlier, Python handles multiple inheritance relatively well, thanks to its clear MRO implementation. Still, try to avoid subclassing multiple classes if you can. Multiple inheritance makes code more complex and harder to reason about. This is why class decorators may be a good replacement for mixin classes.

4. Using __new__() for overriding instantiation¶

The special method __new__() is a static method that’s responsible for creating class instances. It is special-cased, so there is no need to declare it as static using the staticmethod decorator. The __new__(cls[, ...]) method is called prior to the __init__() initialization method. Typically, the implementation of an overridden __new__() invokes its superclass version using super().__new__() with suitable arguments and modifies the instance before returning it.

The following is an example class with an overridden __new__() method implementation that counts the number of class instances:

    class InstanceCountingClass:
        instances_created = 0

        def __new__(cls, *args, **kwargs):
            print('__new__() called with:', cls, args, kwargs)
            instance = super().__new__(cls)
            instance.number = cls.instances_created
            cls.instances_created += 1
            return instance

        def __init__(self, attribute):
            print('__init__() called with:', self, attribute)
            self.attribute = attribute

Here is the log of the example interactive session that shows how our InstanceCountingClass implementation works:

    >>> from instance_counting import InstanceCountingClass
    >>> instance1 = InstanceCountingClass('abc')
    __new__() called with: <class '__main__.InstanceCountingClass'> ('abc',) {}
    __init__() called with: <__main__.InstanceCountingClass object at 0x101259e10> abc
    >>> instance2 = InstanceCountingClass('xyz')
    __new__() called with: <class '__main__.InstanceCountingClass'> ('xyz',) {}
    __init__() called with: <__main__.InstanceCountingClass object at 0x101259dd8> xyz
    >>> instance1.number, instance1.instances_created
    (0, 2)
    >>> instance2.number, instance2.instances_created
    (1, 2)

The __new__() method should usually return an instance of the featured class, but it is also possible for it to return other class instances. If this does happen (a different class instance is returned), then the call to the __init__() method is skipped. This fact is useful when there is a need to modify the creation/initialization behavior of immutable class instances like some of Python’s built-in types, as shown in the following code:

    class NonZero(int):
        def __new__(cls, value):
            return super().__new__(cls, value) if value != 0 else None

        def __init__(self, skipped_value):
            # implementation of __init__ could be skipped in this case
            # but it is left to present how it may be not called
            print("__init__() called")
            super().__init__()

Let’s review these in the following interactive session:

    >>> type(NonZero(-12))
    __init__() called
    <class '__main__.NonZero'>
    >>> type(NonZero(0))
    <class 'NoneType'>
    >>> NonZero(-3.123)
    __init__() called
    -3

So, when should we use __new__()? The answer is simple: only when __init__() is not enough. One such case was already mentioned, that is, subclassing immutable built-in Python types such as int, str, float, frozenset, and so on. This is because there is no way to modify such an immutable object instance in the __init__() method once it has been created.

Some programmers can argue that __new__() may be useful for performing important object initialization that may be missed if the user forgets to use the super().__init__() call in the overridden initialization method. While it sounds reasonable, this has a major drawback. With such an approach, it becomes harder for the programmer to explicitly skip previous initialization steps if that is the desired behavior. It also breaks the unspoken rule that all initialization is performed in __init__().

Because __new__() is not constrained to return the same class instance, it can be easily abused. Irresponsible usage of this method might do a lot of harm to code readability, so it should always be used carefully and backed with extensive documentation. Generally, it is better to search for other solutions that may be available for the given problem, instead of affecting object creation in a way that will break programmers’ basic expectations. Even overridden initialization of immutable types can be replaced with more predictable and well-established design patterns like the Factory Method.
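As an illustration of that last point, the NonZero behavior from the earlier example could be expressed with a factory method instead of an overridden __new__(). This is only a sketch; the from_value() name is an assumption made for this example.

```python
class NonZero(int):
    """Integer subclass meant to be created through from_value()."""

    @classmethod
    def from_value(cls, value):
        # Hypothetical factory method: the caller explicitly opts in to
        # getting None back instead of an instance for a zero value.
        if value == 0:
            return None
        return cls(value)


print(NonZero.from_value(-12), NonZero.from_value(0))
```

Calling NonZero(...) directly still behaves like a normal class instantiation, so the surprising "may return None" behavior is confined to a clearly named method.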

There is at least one aspect of Python programming where extensive usage of the __new__() method is well justified. These are metaclasses.

5. Metaclasses¶

Metaclass is a Python feature that is considered by many as one of the most difficult things to understand in this language and thus avoided by a great number of developers. In reality, it is not as complicated as it sounds once you understand a few basic concepts. As a reward, knowing how to use metaclasses grants you the ability to do things that are not possible without them.

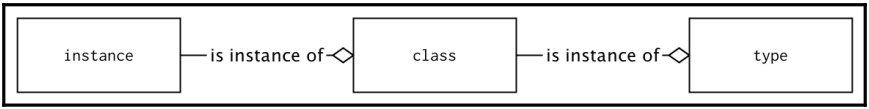

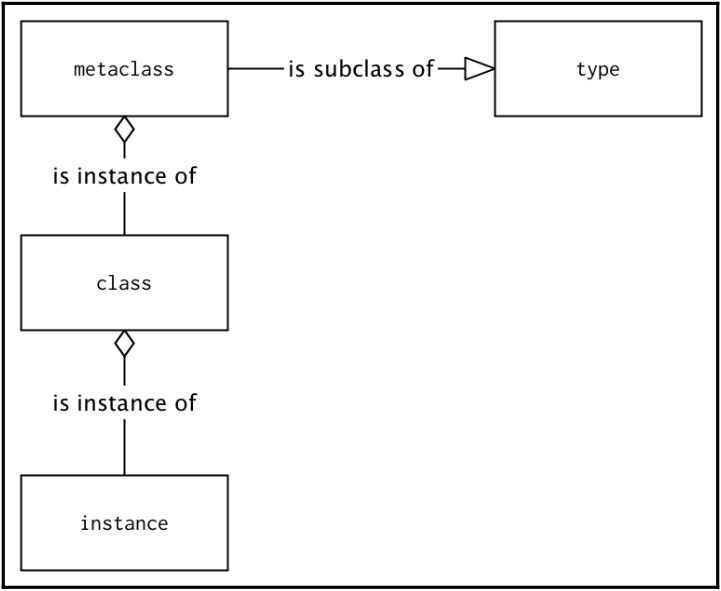

Metaclass is a type (class) that defines other types (classes). The most important thing to

know in order to understand how they work is that classes that define object instances are

objects too. So, if they are objects, then they have an associated class. The basic type of

every class definition is simply the built-in type class. Here is a simple diagram that should

make this clear:

In Python, it is possible to substitute the metaclass for a class object with our own type.

Usually, the new metaclass is still the subclass of the type class because

not doing so would make the resulting classes highly incompatible with other classes in

terms of inheritance:
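The relationships between instances, classes, and metaclasses can also be checked directly in code. The following is a small sketch; the class names Plain, CustomMeta, and WithCustomMeta are hypothetical.

```python
class Plain:
    pass


class CustomMeta(type):
    """Hypothetical metaclass that changes nothing."""


class WithCustomMeta(metaclass=CustomMeta):
    pass


instance = Plain()

print(type(instance) is Plain)             # the class of an instance
print(type(Plain) is type)                 # the class of an ordinary class
print(type(type) is type)                  # type is its own metaclass
print(type(WithCustomMeta) is CustomMeta)  # custom metaclass in use
print(isinstance(WithCustomMeta, type))    # still a regular type
```

Every line prints True, which reflects the fact that a class created with a custom metaclass is still an instance of type through inheritance.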

5.1. The general syntax¶

The call to the built-in type() class can be used as a dynamic equivalent of the class statement. The following is an example of a class definition with the type() call:

    def method(self):
        return 1

    MyClass = type('MyClass', (object,), {'method': method})

This is equivalent to the explicit definition of the class with the class keyword:

    class MyClass:
        def method(self):
            return 1

Every class that’s created with the class statement implicitly uses type as its metaclass. This default behavior can be changed by providing the metaclass keyword argument to the class statement, as follows:

    class ClassWithAMetaclass(metaclass=type):
        pass

The value that’s provided as a metaclass argument is usually another class object, but it can be any other callable that accepts the same arguments as the type class and is expected to return another class object. The call signature is type(name, bases, namespace) and the meaning of the arguments is as follows:

name: This is the name of the class that will be stored in the __name__ attribute

bases: This is the list of parent classes that will become the __bases__ attribute and will be used to construct the MRO of a newly created class

namespace: This is a namespace (mapping) with definitions for the class body that will become the __dict__ attribute
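To see these three arguments in practice, the sketch below uses a plain function as the metaclass callable. The function name recording_metaclass and the recorded dictionary are hypothetical helpers that exist only to expose what Python passes in.

```python
recorded = {}


def recording_metaclass(name, bases, namespace):
    """Hypothetical callable used in place of a metaclass."""
    # Remember the arguments that the class statement passed in.
    recorded.update(name=name, bases=bases, namespace=dict(namespace))
    return type(name, bases, namespace)


class Example(metaclass=recording_metaclass):
    def method(self):
        return 1


print(recorded["name"], recorded["bases"], "method" in recorded["namespace"])
print(Example.__name__, Example.__bases__, Example().method())
```

Note that the bases argument is an empty tuple here because no parent classes were listed, while the resulting class still gets object as its only base.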

One way of thinking about metaclasses is as the __new__() method, but at a higher level of class definition.

Despite the fact that functions that explicitly call type() can be used in place of metaclasses, the usual approach is to use a different class that inherits from type for this purpose. The common template for a metaclass is as follows:

    class Metaclass(type):
        def __new__(mcs, name, bases, namespace, **kwargs):
            # extra keyword arguments from the class statement arrive here
            return super().__new__(mcs, name, bases, namespace)

        @classmethod
        def __prepare__(mcs, name, bases, **kwargs):
            return super().__prepare__(name, bases, **kwargs)

        def __init__(cls, name, bases, namespace, **kwargs):
            super().__init__(name, bases, namespace)

        def __call__(cls, *args, **kwargs):
            return super().__call__(*args, **kwargs)

The name, bases, and namespace arguments have the same meaning as in the type() call we explained earlier, but each of these four methods can have the following different purposes:

__new__(mcs, name, bases, namespace, **kwargs): This is responsible for the actual creation of the class object in the same way as it does for ordinary classes. The first positional argument is a metaclass object. In the preceding example, it would simply be Metaclass. Note that mcs is the popular naming convention for this argument.

__prepare__(mcs, name, bases, **kwargs): This creates an empty namespace object. By default, it returns an empty dict, but it can be overridden to return any other mapping type. Note that it does not accept namespace as an argument because, before calling it, the namespace does not exist.

__init__(cls, name, bases, namespace, **kwargs): This is not commonly seen in metaclass implementations but has the same meaning as in ordinary classes. It can perform additional class object initialization once it is created with __new__(). The first positional argument is now named cls by convention to mark that this is already a created class object (metaclass instance) and not a metaclass object. When __init__() is called, the class has already been constructed, so this method can do fewer things than the __new__() method. Implementing such a method is very similar to using class decorators, but the main difference is that __init__() will be called for every subclass, while class decorators are not called for subclasses.

__call__(cls, *args, **kwargs): This is called when an instance of a metaclass is called. The instance of a metaclass is a class object, so it is invoked when you create new instances of a class. This can be used to override the default way in which class instances are created and initialized.
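As one illustration of overriding __call__(), the following sketch caches a single instance per class. This is a common textbook use of the hook rather than something taken from this chapter, and the names SingletonMeta and Configuration are hypothetical.

```python
class SingletonMeta(type):
    """Hypothetical metaclass that caches one instance per class."""

    _instances = {}

    def __call__(cls, *args, **kwargs):
        # Runs whenever a class using this metaclass is called, that is,
        # before the class's own __new__() and __init__() are invoked.
        if cls not in SingletonMeta._instances:
            SingletonMeta._instances[cls] = super().__call__(*args, **kwargs)
        return SingletonMeta._instances[cls]


class Configuration(metaclass=SingletonMeta):
    def __init__(self, name="default"):
        self.name = name


first = Configuration("first")
second = Configuration("second")
print(first is second, second.name)
```

The second call never reaches Configuration.__init__(), so the arguments passed to it are silently ignored; whether that is acceptable depends on the use case.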

Each of the preceding methods can accept additional extra keyword arguments, all of which are represented by **kwargs. These arguments can be passed to the metaclass object using extra keyword arguments in the class definition in the form of the following code:

    class Klass(metaclass=Metaclass, extra="value"):
        pass
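A minimal sketch of a metaclass that actually consumes such an extra keyword argument might look as follows. The names TaggingMeta, Tagged, and tag are assumptions made for this example.

```python
class TaggingMeta(type):
    """Hypothetical metaclass that consumes a ``tag`` keyword argument."""

    def __new__(mcs, name, bases, namespace, tag=None, **kwargs):
        # The extra keyword from the class statement is removed here so
        # that it is not forwarded to type.__new__().
        cls = super().__new__(mcs, name, bases, namespace, **kwargs)
        cls.tag = tag
        return cls

    def __init__(cls, name, bases, namespace, tag=None, **kwargs):
        # __init__() receives the same keywords and must accept them too.
        super().__init__(name, bases, namespace)


class Tagged(metaclass=TaggingMeta, tag="value"):
    pass


print(Tagged.tag)
```

Both __new__() and __init__() list the keyword explicitly because Python passes the extra keywords from the class statement to each of them.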

This amount of information can be overwhelming at the beginning without proper examples, so let’s trace the creation of metaclasses, classes, and instances with some print() calls:

    class RevealingMeta(type):
        def __new__(mcs, name, bases, namespace, **kwargs):
            print(mcs, "__new__ called")
            return super().__new__(mcs, name, bases, namespace)

        @classmethod
        def __prepare__(mcs, name, bases, **kwargs):
            print(mcs, "__prepare__ called")
            return super().__prepare__(name, bases, **kwargs)

        def __init__(cls, name, bases, namespace, **kwargs):
            print(cls, "__init__ called")
            super().__init__(name, bases, namespace)

        def __call__(cls, *args, **kwargs):
            print(cls, "__call__ called")
            return super().__call__(*args, **kwargs)

Using RevealingMeta as a metaclass to create a new class definition will give the following output in the Python interactive session:

    >>> class RevealingClass(metaclass=RevealingMeta):
    ...     def __new__(cls):
    ...         print(cls, "__new__ called")
    ...         return super().__new__(cls)
    ...     def __init__(self):
    ...         print(self, "__init__ called")
    ...         super().__init__()
    ...
    <class 'RevealingMeta'> __prepare__ called
    <class 'RevealingMeta'> __new__ called
    <class 'RevealingClass'> __init__ called
    >>> instance = RevealingClass()
    <class 'RevealingClass'> __call__ called
    <class 'RevealingClass'> __new__ called
    <RevealingClass object at 0x1032b9fd0> __init__ called

5.2. Metaclass usage¶

Metaclasses, once mastered, are a powerful feature, but always complicate the code. Metaclasses also do not compose well and you’ll quickly run into problems if you try to mix multiple metaclasses through inheritance.
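The composition problem can be reproduced in a few lines. In the sketch below (all class names are hypothetical), two unrelated metaclasses meet through multiple inheritance, and the class statement fails until a combined metaclass is provided explicitly.

```python
class MetaA(type):
    pass


class MetaB(type):
    pass


class A(metaclass=MetaA):
    pass


class B(metaclass=MetaB):
    pass


conflict_message = None
try:
    # Python cannot pick a single metaclass for this class.
    class Broken(A, B):
        pass
except TypeError as error:
    conflict_message = str(error)


class MetaAB(MetaA, MetaB):
    """Combined metaclass that resolves the conflict by hand."""


class Combined(A, B, metaclass=MetaAB):
    pass


print(conflict_message)
print(type(Combined).__name__)
```

Writing the combined metaclass is trivial here only because neither metaclass does anything; when both override the same methods, making them cooperate can take real design effort.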

For simple things, like changing the read/write attributes or adding new ones, metaclasses can be avoided in favor of simpler solutions, such as properties, descriptors, or class decorators.

But there are situations where things cannot be easily done without them. For instance, it is hard to imagine Django’s ORM implementation built without extensive use of metaclasses. It could be possible, but it is rather unlikely that the resulting solution would be similarly easy to use. Frameworks are the place where metaclasses really shine. They usually have a lot of complex internal code that is not easy to understand and follow, but eventually allow other programmers to write more condensed and readable code that operates on a higher level of abstraction.
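A much smaller example of the kind of convenience that frameworks build with metaclasses is automatic registration of subclasses. The sketch below is not taken from Django or any other framework; PluginRegistry, Plugin, and the plugin class names are hypothetical.

```python
class PluginRegistry(type):
    """Hypothetical metaclass that records every subclass it creates."""

    registry = {}

    def __init__(cls, name, bases, namespace, **kwargs):
        super().__init__(name, bases, namespace)
        # Skip the root class itself; it has no explicit bases.
        if bases:
            PluginRegistry.registry[name] = cls


class Plugin(metaclass=PluginRegistry):
    pass


class JsonPlugin(Plugin):
    pass


class CsvPlugin(Plugin):
    pass


print(sorted(PluginRegistry.registry))
```

The authors of JsonPlugin and CsvPlugin write ordinary class definitions and do not need to know that a registry exists, which is the kind of higher-level, condensed code the paragraph above refers to.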

5.3. Metaclass pitfalls¶

Like some other advanced Python features, metaclasses are very flexible and can be easily abused. While the call signature of the class is rather strict, Python does not enforce the type of the returned object. It can be anything as long as it accepts incoming arguments on calls and has the required attributes whenever they are needed.

One such object that can be anything-anywhere is an instance of the Mock class that’s provided in the unittest.mock module. Mock is not a metaclass and does not inherit from the type class. It also does not return a class object on instantiation. Still, it can be included as a metaclass keyword argument in the class definition, and this will not raise any syntax errors. Using Mock as a metaclass is, of course, complete nonsense, but let’s consider the following example:

    >>> from unittest.mock import Mock
    >>> class Nonsense(metaclass=Mock):  # pointless, but illustrative
    ...     pass
    ...
    >>> Nonsense
    <Mock spec='str' id='4327214664'>

It’s not hard to predict that any attempt to instantiate our Nonsense pseudo-class will fail. What is really interesting is the following exception and traceback you’ll get trying to do so:

    >>> Nonsense()
    Traceback (most recent call last):
      File "<stdin>", line 1, in <module>
      File "/Library/Frameworks/Python.framework/Versions/3.5/lib/python3.5/unittest/mock.py", line 917, in __call__
        return _mock_self._mock_call(*args, **kwargs)
      File "/Library/Frameworks/Python.framework/Versions/3.5/lib/python3.5/unittest/mock.py", line 976, in _mock_call
        result = next(effect)
    StopIteration

Does the StopIteration exception give you any clue that there may be a problem with our class definition on the metaclass level? Obviously not. This example illustrates how hard it may be to debug metaclass code if you don’t know where to look for errors.

6. Code generation¶

As we already mentioned, the dynamic code generation is the most difficult approach to metaprogramming. There are tools in Python that allow you to generate and execute code or even do some modifications to the already compiled code objects.

Various projects such as Hy show that even whole languages can be reimplemented in Python using code generation techniques. This proves that the possibilities are practically limitless. Knowing how vast this topic is and how badly it is riddled with various pitfalls, I won’t even try to give detailed suggestions on how to create code this way, or to provide useful code samples.

Anyway, knowing what is possible may be useful for you if you plan to study this field deeper by yourself. So, treat this section only as a short summary of possible starting points for further learning.

6.1. exec, eval and compile¶

Python provides the following three built-in functions to manually execute, evaluate, and compile arbitrary Python code:

exec(object, globals, locals): This allows you to dynamically execute Python code. object should be a string or code object (see the compile() function) representing a single statement or a sequence of multiple statements. The globals and locals arguments provide global and local namespaces for the executed code and are optional. If they are not provided, then the code is executed in the current scope. If provided, globals must be a dictionary, while locals might be any mapping object. exec() always returns None.

eval(expression, globals, locals): This is used to evaluate the given expression by returning its value. It is similar to exec(), but it expects expression to be a single Python expression and not a sequence of statements. It returns the value of the evaluated expression.

compile(source, filename, mode): This compiles the source into a code object or AST object. The source code is provided as a string value in the source argument. The filename should be the file from which the code was read. If it has no file associated (for example, because it was created dynamically), then <string> is the value that is commonly used. Mode should be either exec (sequence of statements), eval (single expression), or single (a single interactive statement, such as in a Python interactive session).
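The three functions described above can be tried out with trusted, hard-coded strings, as in the following short sketch. The variable names and the code strings are made up for illustration.

```python
# exec() runs statements; names they define end up in the given namespace.
namespace = {}
exec("def double(x):\n    return x * 2", namespace)
doubled = namespace["double"](21)

# eval() evaluates a single expression and returns its value.
total = eval("a + b", {"a": 1, "b": 2})

# compile() produces code objects that exec() and eval() also accept.
statements = compile("answer = 6 * 7", "<string>", "exec")
scope = {}
exec(statements, scope)

expression = compile("answer + 1", "<string>", "eval")
incremented = eval(expression, scope)

print(doubled, total, scope["answer"], incremented)
```

Passing explicit dictionaries as namespaces keeps the generated names out of the current scope and makes it clear where the results live.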

The exec() and eval() functions are the easiest to start with when trying to dynamically generate code because they can operate on strings. If you already know how to program in Python, then you may already know how to correctly generate working source code programmatically.

The most useful in the context of metaprogramming is obviously exec() because it allows you to execute any sequence of Python statements. The word any should be alarming for you. Even eval(), which only allows the evaluation of expressions, can lead to serious security holes when fed with user input, even in the hands of a skillful programmer. Note that crashing the Python interpreter is the scenario you should be least afraid of. Introducing a vulnerability to remote execution exploits due to irresponsible use of exec() and eval() can cost you your image as a professional developer, or even your job.

Even if used with trusted input, there is a list of little details about exec() and eval() that is too long to be included here, but that might affect how your application works in ways you would not expect. Armin Ronacher has a good article that lists the most important of them, titled “Be careful with exec and eval in Python” (refer to http://lucumr.pocoo.org/2011/2/1/exec-in-python/ ).

Despite all these frightening warnings, there are natural situations where the usage of exec() and eval() is really justified. Still, in the case of even the tiniest doubt, you should not use them and should try to find a different solution.

Tip

The signature of the eval() function might make you think that if you provide empty globals and locals namespaces and wrap it with proper try … except statements, then it will be reasonably safe. Nothing could be more wrong. Ned Batchelder has written a very good article in which he shows how to cause an interpreter segmentation fault in the eval() call, even with erased access to all Python built-ins (see http://nedbatchelder.com/blog/201206/eval_really_is_dangerous.html ). This alone is proof that both exec() and eval() should never be used with untrusted input.
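When the input is only expected to contain Python literals (numbers, strings, lists, dictionaries, and so on), one different solution available in the standard library is ast.literal_eval(). The sketch below is not part of the original text, and the input strings are made up. Note that the official documentation still warns that very large or deeply nested input can exhaust memory or crash the interpreter, so this is a narrower tool and not a general sandbox.

```python
import ast

# Literal structures are parsed into ordinary Python objects.
parsed = ast.literal_eval("{'retries': 3, 'hosts': ['a', 'b']}")

# Anything that is not a plain literal, such as a function call,
# is rejected instead of being executed.
try:
    ast.literal_eval("__import__('os').getcwd()")
except ValueError:
    rejected = True
else:
    rejected = False

print(parsed, rejected)
```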

6.2. Abstract syntax tree (AST)¶

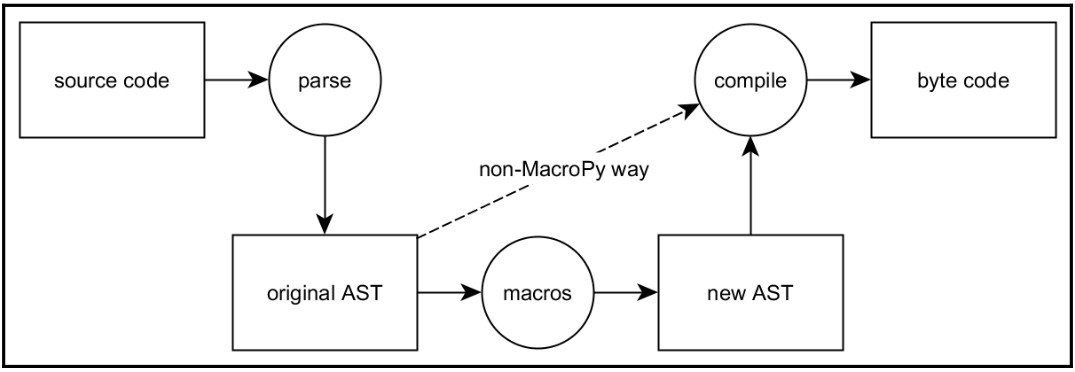

Python source code is converted into an AST before it is compiled into byte code. This is a tree representation of the abstract syntactic structure of the source code. Processing of Python grammar is available thanks to the built-in ast module. Raw ASTs of Python code can be created using the compile() function with the ast.PyCF_ONLY_AST flag, or by using the ast.parse() helper. Direct translation in reverse is not that simple: before Python 3.9, there was no function in the standard library that could do so (Python 3.9 added ast.unparse()). Some projects, such as PyPy, do such things though.

The ast module provides some helper functions that allow you to work with the AST, for example:

    >>> import ast
    >>> tree = ast.parse('def hello_world(): print("hello world!")')
    >>> tree
    <_ast.Module object at 0x00000000038E9588>
    >>> ast.dump(tree)
    "Module(
        body=[
            FunctionDef(
                name='hello_world',
                args=arguments(
                    args=[],
                    vararg=None,
                    kwonlyargs=[],
                    kw_defaults=[],
                    kwarg=None,
                    defaults=[]
                ),
                body=[
                    Expr(
                        value=Call(
                            func=Name(id='print', ctx=Load()),
                            args=[Str(s='hello world!')],
                            keywords=[]
                        )
                    )
                ],
                decorator_list=[],
                returns=None
            )
        ]
    )"

The output of ast.dump() in the preceding example was reformatted to increase the readability and better show the tree-like structure of the AST. It is important to know that the AST can be modified before being passed to compile(). This gives you many new possibilities. For instance, new syntax nodes can be used for additional instrumentation, such as test coverage measurement. It is also possible to modify the existing code tree in order to add new semantics to the existing syntax. Such a technique is used by the MacroPy project (https://github.com/lihaoyi/macropy) to add syntactic macros to Python using the already existing syntax.

AST can also be created in a purely artificial manner, and there is no need to parse any source at all. This gives Python programmers the ability to create Python bytecode for custom domain-specific languages, or even completely implement other programming languages on top of Python VMs.
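Modifying a tree before compiling it can be sketched with the ast.NodeTransformer helper from the standard library. The transformer below is a deliberately silly, hypothetical example (the AddToMult name and the source string are assumptions): it gives the + operator the semantics of multiplication.

```python
import ast


class AddToMult(ast.NodeTransformer):
    """Hypothetical transformer that turns every ``+`` into ``*``."""

    def visit_BinOp(self, node):
        # Transform nested expressions first.
        self.generic_visit(node)
        if isinstance(node.op, ast.Add):
            node.op = ast.Mult()
        return node


tree = ast.parse("result = 6 + 7")
tree = AddToMult().visit(tree)
# Newly created nodes need line and column information before compiling.
ast.fix_missing_locations(tree)

namespace = {}
exec(compile(tree, "<ast>", "exec"), namespace)
print(namespace["result"])
```

The source text still says 6 + 7, but the compiled code multiplies, which is exactly the kind of "new semantics for existing syntax" that AST manipulation makes possible.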

6.2.1. Import hooks¶

Taking advantage of MacroPy’s ability to modify original ASTs would be as easy as using the import macropy.activate statement if it could somehow override the Python import behavior. Fortunately, Python provides a way to intercept imports using the following two kinds of import hooks:

Meta hooks: These are called before any other import processing has occurred. Using meta hooks, you can override the way in which sys.path is processed for even frozen and built-in modules. To add a new meta hook, a new meta path finder object must be added to the sys.meta_path list.

Import path hooks: These are called as part of sys.path processing. They are used if the path item associated with the given hook is encountered. The import path hooks are added by extending the sys.path_hooks list with a new path finder object.

The details of implementing both path finders and meta path finders are extensively documented in the official Python documentation (see https://docs.python.org/3/reference/import.html ). The official documentation should be your primary resource if you want to interact with imports on that level. This is so because the import machinery in Python is rather complex and any attempt to summarize it in a few paragraphs would inevitably fail. Here, we just note that such things are possible.
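Just to give a feeling for what a meta hook looks like, here is a minimal sketch of a meta path finder that serves a module from an in-memory string. The class name VirtualModuleFinder, the module name virtual_hello, and its source are hypothetical; the importlib.abc and importlib.util helpers are part of the standard library.

```python
import importlib.abc
import importlib.util
import sys


class VirtualModuleFinder(importlib.abc.MetaPathFinder, importlib.abc.Loader):
    """Hypothetical finder and loader for modules kept in a dictionary."""

    def __init__(self, sources):
        self.sources = sources

    def find_spec(self, fullname, path, target=None):
        # Returning None lets the remaining finders handle the import.
        if fullname not in self.sources:
            return None
        return importlib.util.spec_from_loader(fullname, self)

    def exec_module(self, module):
        # Execute the stored source in the namespace of the new module.
        exec(self.sources[module.__name__], module.__dict__)


sys.meta_path.insert(0, VirtualModuleFinder({
    "virtual_hello": "def hello():\n    return 'hello from a virtual module'\n",
}))

import virtual_hello

print(virtual_hello.hello())
```

Inserting the finder at the front of sys.meta_path means it is consulted before the regular finders, which is why it should decline every name it does not own.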

6.3. Projects that use code generation patterns¶

It is hard to find a really usable implementation of the library that relies on code generation patterns that is not only an experiment or simple proof of concept. The reasons for that situation are fairly obvious:

Deserved fear of the exec() and eval() functions because, if used irresponsibly, they can cause real disasters

Successful code generation is very difficult to develop and maintain because it requires a deep understanding of the language and exceptional programming skills in general

Despite these difficulties, there are some projects that successfully take this approach either to improve performance or achieve things that would be impossible by other means.

6.3.1. Falcon’s compiled router¶

Falcon ( http://falconframework.org/ ) is a minimalist Python WSGI web framework for building fast and lightweight APIs. It strongly encourages the REST architectural style that is currently very popular around the web. It is a good alternative to other rather heavy frameworks, such as Django or Pyramid. It is also a strong competitor to other micro-frameworks that aim for simplicity, such as Flask, Bottle, or web2py.

One of its features is its very simple routing mechanism. It is not as complex as the routing provided by Django urlconf and does not provide as many features, but in most cases it is just enough for any API that follows the REST architectural design. What is most interesting about Falcon’s routing is the internal construction of its router. Falcon’s router is implemented using code generated from the list of routes, and this code changes every time a new route is registered. This is the effort that’s needed to make routing fast.

Consider this very short API example, taken from Falcon’s web documentation:

    import falcon
    import json

    class QuoteResource:
        def on_get(self, req, resp):
            """Handles GET requests"""
            quote = {
                'quote': 'I\'ve always been more interested in '
                         'the future than in the past.',
                'author': 'Grace Hopper'
            }
            resp.body = json.dumps(quote)

    api = falcon.API()
    api.add_route('/quote', QuoteResource())

In short, the call to the api.add_route() method dynamically updates the whole generated code tree for Falcon’s request router. It also compiles it using the compile() function and generates the new route-finding function using eval(). Let’s take a closer look at the __code__ attribute of the api._router._find() function:

    >>> api._router._find.__code__
    <code object find at 0x00000000033C29C0, file "<string>", line 1>
    >>> api.add_route('/none', None)
    >>> api._router._find.__code__
    <code object find at 0x00000000033C2810, file "<string>", line 1>

This transcript shows that the code of this function was generated from a string and not from a real source code file (the “<string>” file). It also shows that the actual code object changes with every call to the api.add_route() method (the object’s address in memory changes).
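The general idea of regenerating a lookup function from a list of routes can be sketched in a few lines. The following is emphatically not Falcon’s implementation, only a toy illustration of the technique; the build_router() helper and the routes are hypothetical.

```python
def build_router(routes):
    """Hypothetical helper that generates a route-finding function."""
    lines = ["def find(path):"]
    for index, route in enumerate(routes):
        # repr() yields a valid string literal for the generated source.
        lines.append(f"    if path == {route!r}:")
        lines.append(f"        return {index}")
    lines.append("    return None")
    source = "\n".join(lines)

    namespace = {}
    exec(compile(source, "<string>", "exec"), namespace)
    return namespace["find"]


routes = ["/quote"]
find = build_router(routes)
first_code = find.__code__

# Registering a new route means generating the function again.
routes.append("/none")
find = build_router(routes)

print(find("/none"), find("/missing"), find.__code__ is first_code)
```

As in the transcript above, every regeneration produces a new code object whose file name is the placeholder passed to compile().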

6.3.2. Hy¶

Hy (http://docs.hylang.org/) is a dialect of Lisp that is written entirely in Python. Many similar projects that implement other languages in Python usually only try to tokenize the plain form of code that’s provided either as a file-like object or string and interpret it as a series of explicit Python calls. Unlike others, Hy can be considered a language that runs fully in the Python runtime environment, just like Python does. Code written in Hy can use the existing built-in modules and external packages, and vice versa: code written in Hy can be imported back into Python.

To embed Lisp in Python, Hy translates Lisp code directly into Python AST. Import interoperability is achieved using the import hook that is registered once the Hy module is imported into Python. Every module with the .hy extension is treated as a Hy module and can be imported like an ordinary Python module. The following is a hello world program written in this Lisp dialect:

    ;; hyllo.hy
    (defn hello [] (print "hello world"))

It can be imported and executed with the following Python code:

    >>> import hy
    >>> import hyllo
    >>> hyllo.hello()
    hello world

If we dig deeper and try to disassemble hyllo.hello using the built-in dis module, we will notice that the byte code of the Hy function does not differ significantly from its pure Python counterpart, as shown in the following code:

    >>> import dis
    >>> dis.dis(hyllo.hello)
      2           0 LOAD_GLOBAL        0 (print)
                  3 LOAD_CONST         1 ('hello world!')
                  6 CALL_FUNCTION      1 (1 positional, 0 keyword pair)
                  9 RETURN_VALUE
    >>> def hello(): print("hello world!")
    ...
    >>> dis.dis(hello)
      1           0 LOAD_GLOBAL        0 (print)
                  3 LOAD_CONST         1 ('hello world!')
                  6 CALL_FUNCTION      1 (1 positional, 0 keyword pair)
                  9 POP_TOP
                 10 LOAD_CONST         0 (None)
                 13 RETURN_VALUE